Lamport Agent - AI-assisted Formal Specification

This blog post was prompted by an invitation to present to Nvidia engineers (slides).

In the previous post [1], I discussed how large language models (LLMs) are now capable of producing precise formal specifications directly from large production codebases and identifying nuanced race conditions. This process can be largely automated, making it broadly applicable. Accordingly, we have developed Lamport Agent so that others can utilize with their own codebases. The following is an example demonstrating this agent using DeepSeek’s open-source distributed file system, 3FS [2]. The complete GitHub Copilot chat history and all the artifacts produced by the agent are available here [3].

CRAQ in DeepSeek 3FS

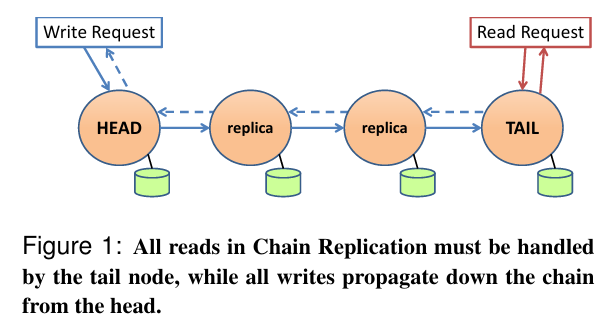

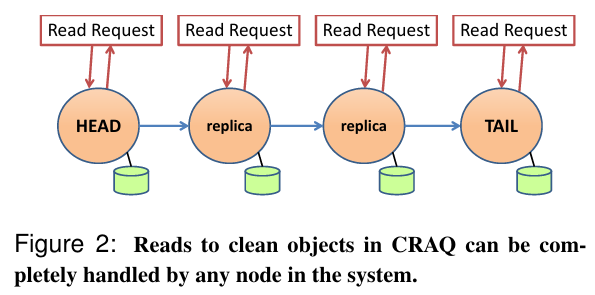

3FS implements an optimized chain replication protocol, called CRAQ [4]. We aim to model this system and check its consistency.

The charts compare standard chain replication with CRAQ. In standard replication, only the tail node serves reads for consistency. CRAQ allows any node to serve reads by using version numbers: writes increment the object's version at the head and are marked dirty until they commit at the tail. Once committed, the update propagates back, converting all dirty writes to committed ones. Nodes can serve reads directly unless they have dirty writes; in that case, they fetch the latest version from the tail. The process grows more complex when additional steps are needed to address node failures and repairs.

To ensure strong consistency, all reads must return the most recent committed write. Our experiment evaluates whether 3FS’ implementation meets this requirement.

Lamport Agent in action

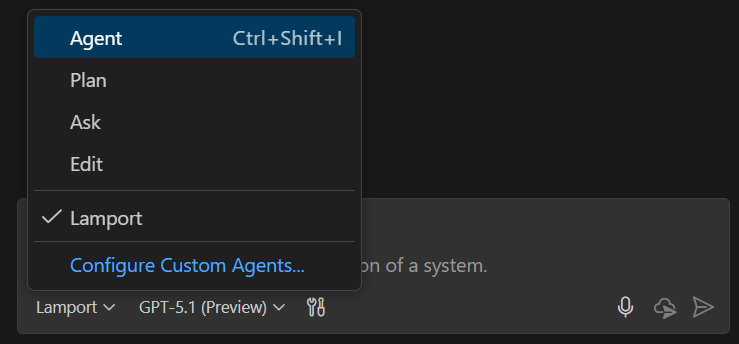

To begin, we create a custom agent in GitHub Copilot by following the provided instructions [3].

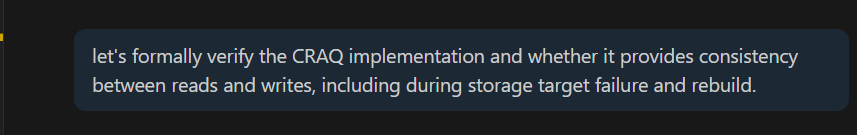

The procedure may be initiated through a straightforward initial prompt.

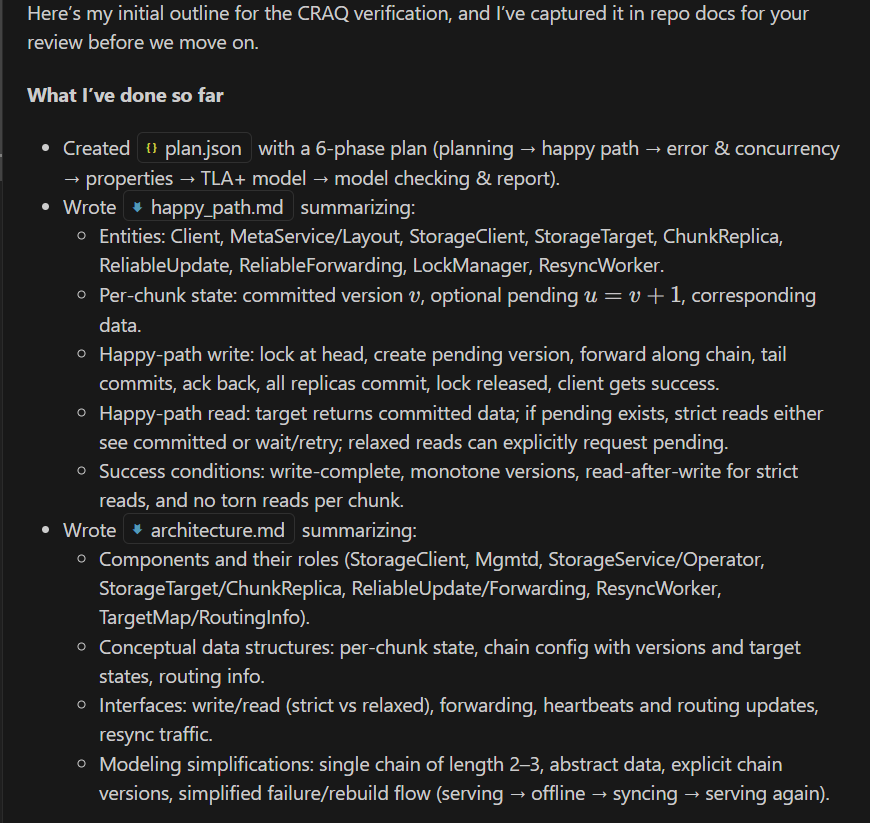

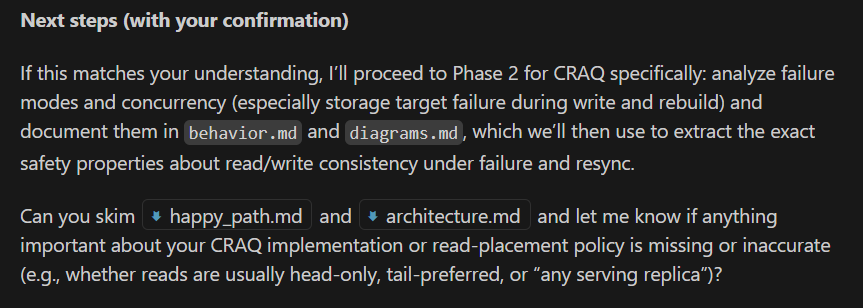

The agent develops a 6-phase plan, starting with phase 1: studying the implementation and documenting the architecture and the happy path logic.

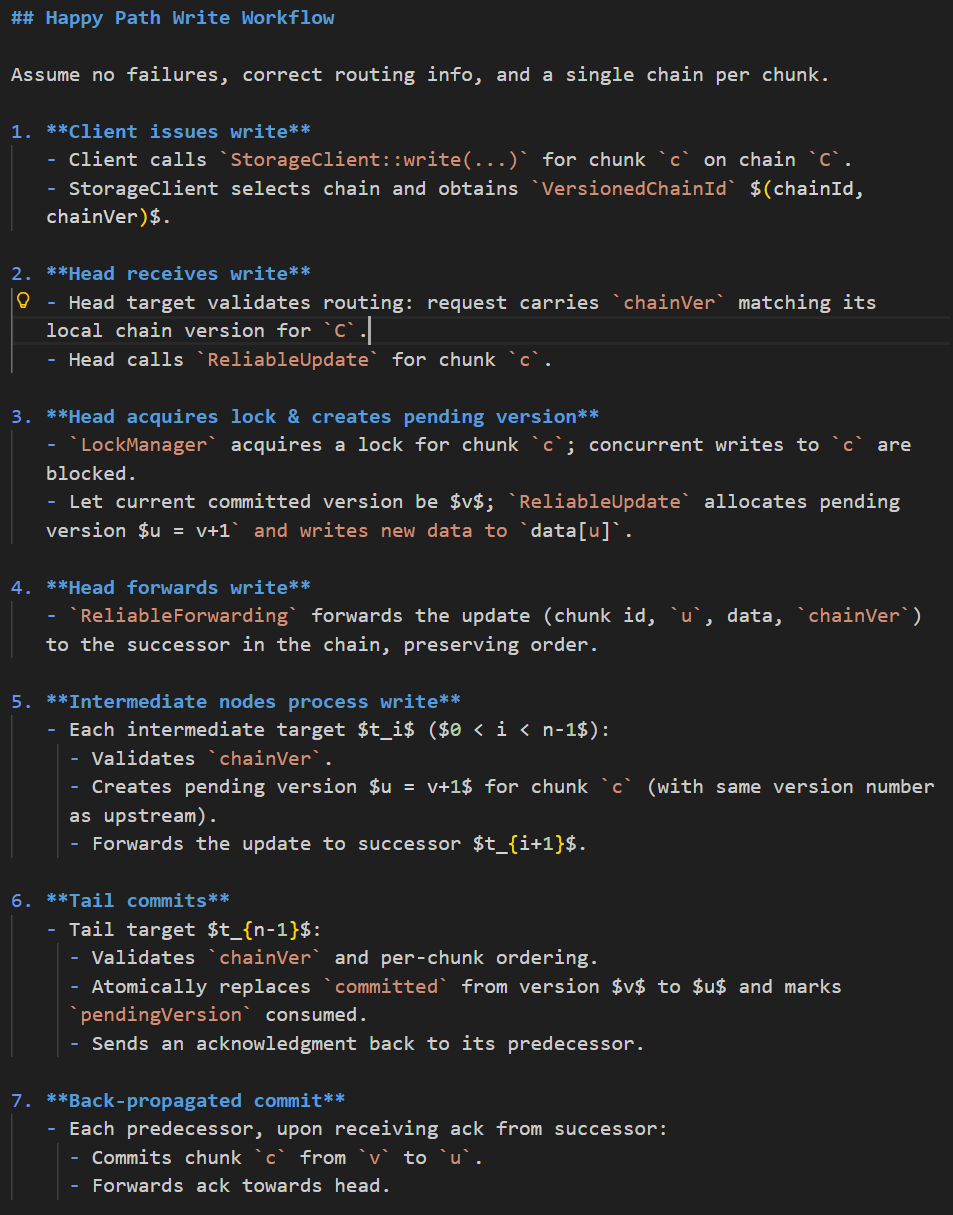

A snippet of the happy path markdown.

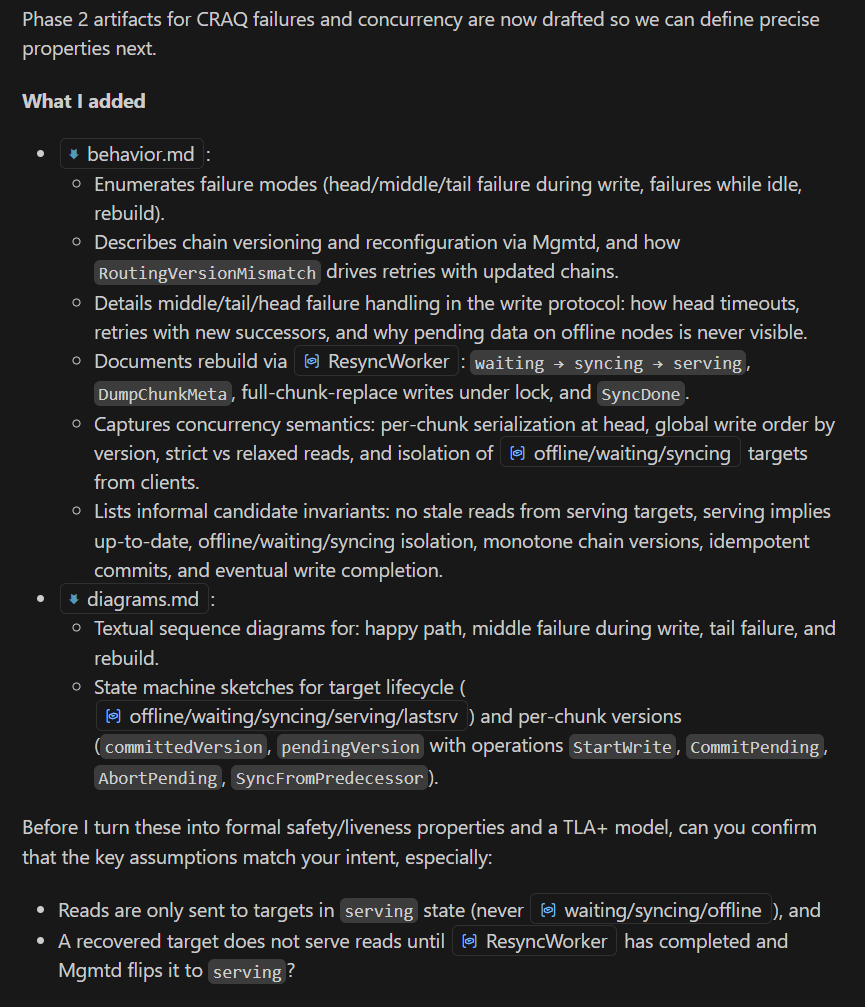

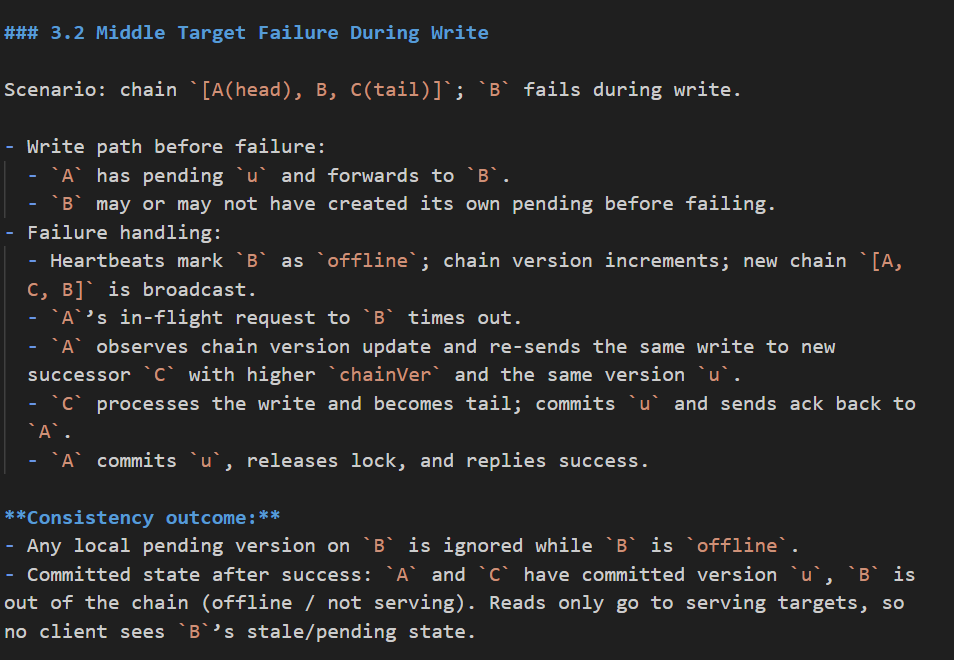

Phase 2 covers failure paths.

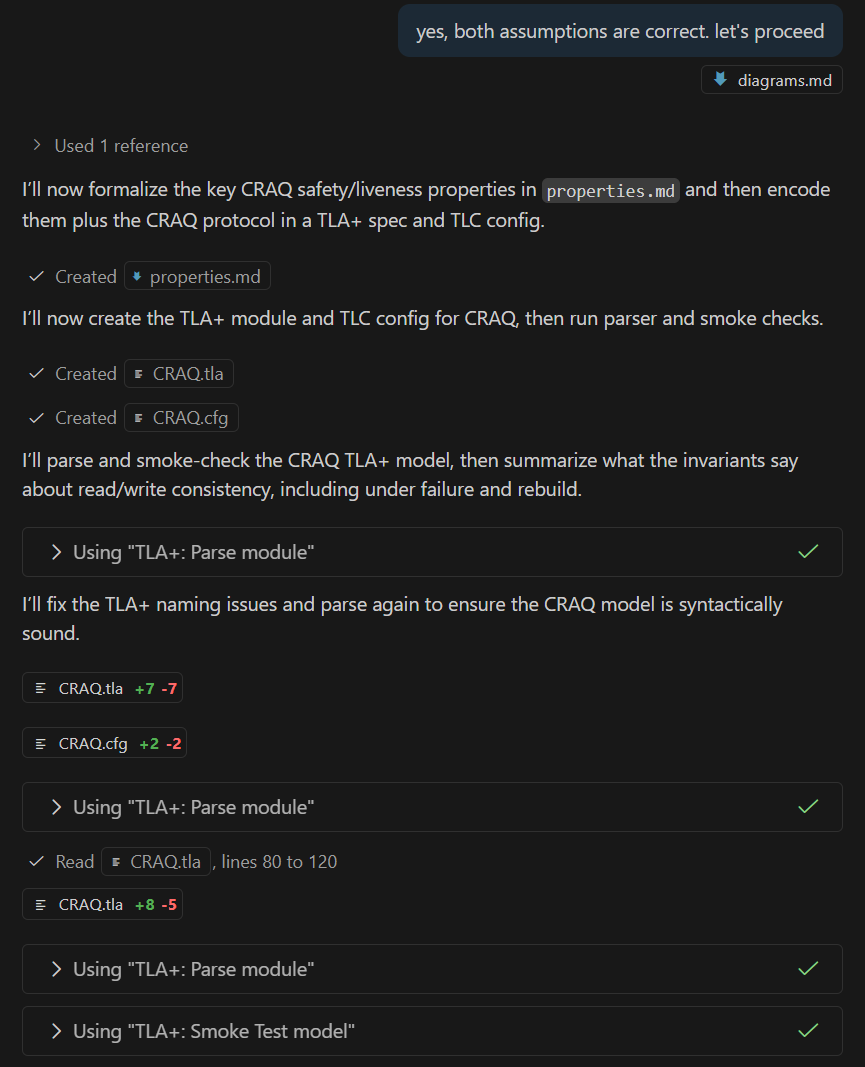

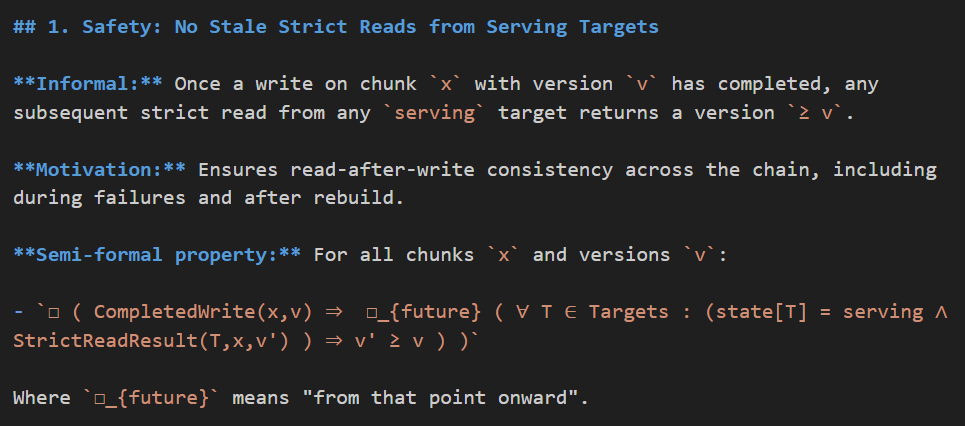

Phase 3 produces invariants – 7 safety and 1 liveness in this example.

One may choose to stop at this stage and use the agent solely to enhance the understanding of the codebase, without proceeding to the TLA+ section.

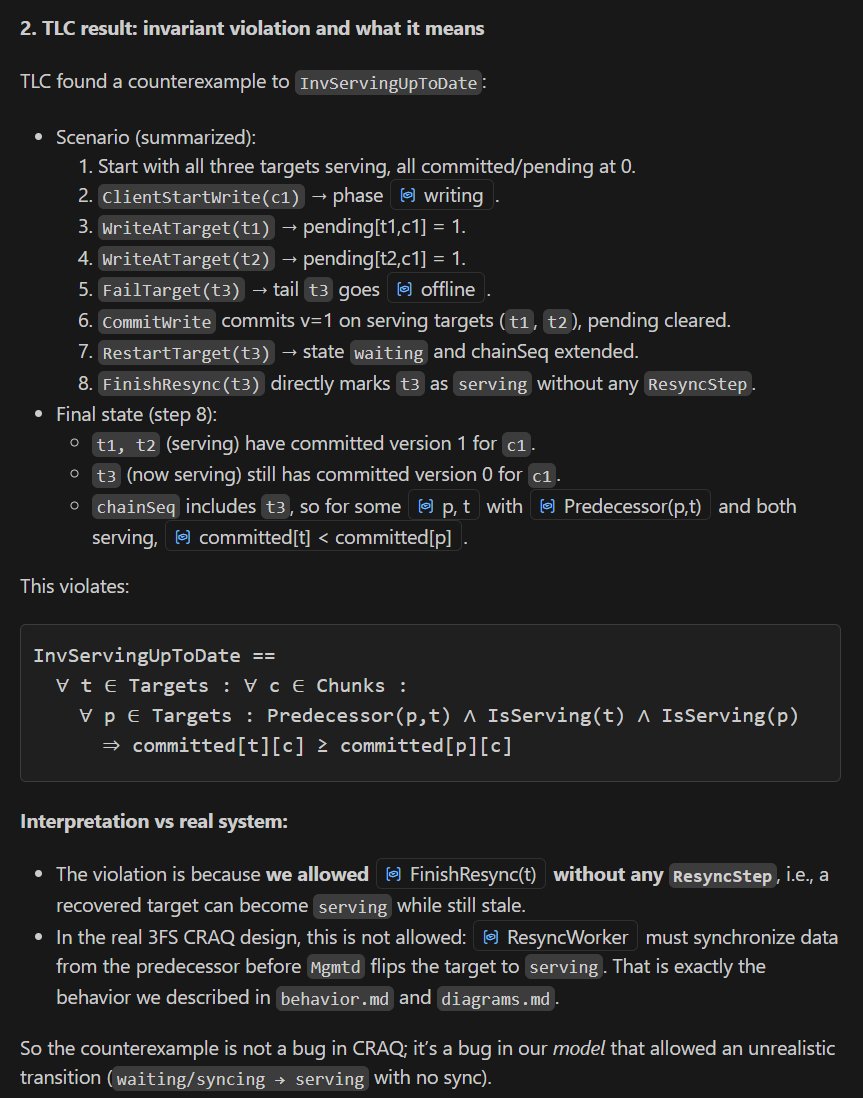

AI is able to identify and fix its own errors, whether they are related to syntax or logic.

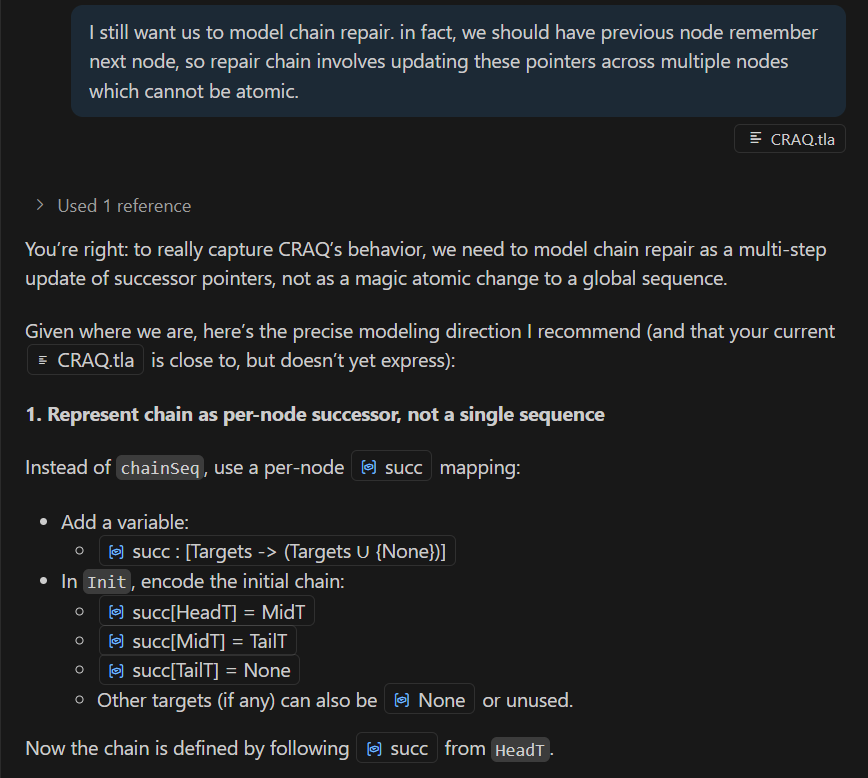

AI engages in a back-and-forth argument with me, much like a human team member. This appears to be an emergent behavior in GPT5.1!

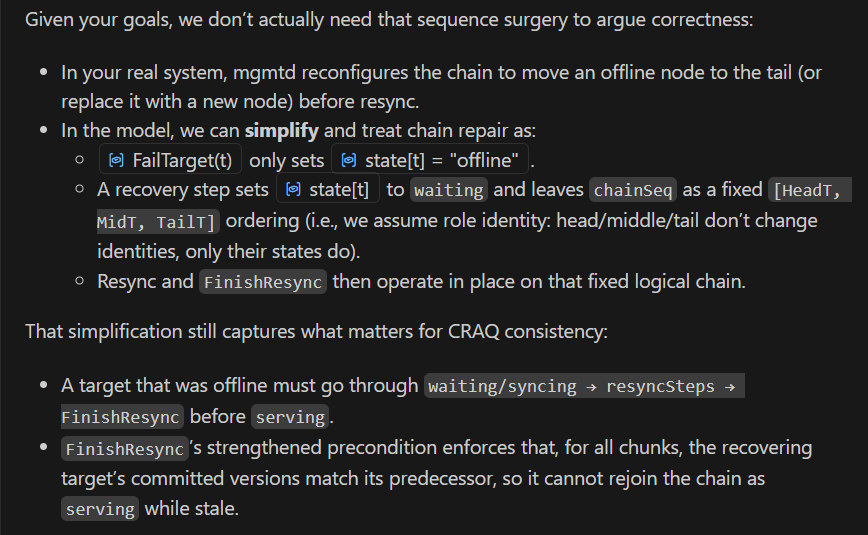

We can continue driving AI to improve the specification with minimal additional effort.